Last week the guys at fal.ai released a new version of their upscaler model, AuraSR. A few days after that, Jeremy Howard and the answer.ai team released FastHTML, a library for easily building web app frontends in Python (an idea I had also started working on, but abandoned when I saw that answer.ai was already building it). I wanted to put the two together and see how each of them performed.

For building web apps like this, I usually try to lean as heavily as I can on LLMs: I describe the logic that needs to be implemented and let the model go from there. I initially tried this approach without FastHTML, using Claude 3.5 Sonnet and DeepSeek-Coder-V2. Neither model was able to produce a working app with all the specified features. I then tried again, this time using FastHTML and only Claude 3.5 Sonnet (sorry DS, but your model is just too slow; one day together.ai will host it for you).

Because FastHTML is a brand-new library, the question becomes how to get the AI to use it properly. Thankfully, the team anticipated this and provide a file in their docs that is meant to be added to the prompt to tell the AI how to use the library. With that file in the prompt and slightly modified instructions, the model was able to one-shot the app, and in follow-up prompts it also added a few extra features I wanted.
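The trick above is really just prompt assembly: put the library's LLM-oriented docs in front of the task description so the model has a reference for an API it never saw in training. Here is a minimal sketch, assuming the docs file has already been downloaded locally. The filename `fasthtml_llm_docs.txt`, the `build_prompt` helper, and the `<docs>` tag layout are all hypothetical, and actually sending the prompt to a model is left out.

```python
from pathlib import Path
import tempfile


def build_prompt(docs_path: Path, task: str) -> str:
    """Prepend library docs (a hypothetical local file) to the app description."""
    docs = docs_path.read_text(encoding="utf-8")
    # Wrap the docs in tags so the model can tell reference material
    # apart from the actual instructions that follow.
    return (
        "Here is the documentation for FastHTML, a new Python web framework:\n"
        f"<docs>\n{docs}\n</docs>\n\n"
        "Using FastHTML as documented above, build the following app:\n"
        f"{task}\n"
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Stand-in for the real docs file; the filename is hypothetical.
        docs_file = Path(tmp) / "fasthtml_llm_docs.txt"
        docs_file.write_text("FastHTML quick reference goes here.", encoding="utf-8")
        prompt = build_prompt(
            docs_file, "An image upload page that upscales images with AuraSR."
        )
        print(prompt)
```

Putting the docs first and the task last is one reasonable ordering, not necessarily how the original prompt was arranged; the point is simply that the model sees the library's conventions before it is asked to use them.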

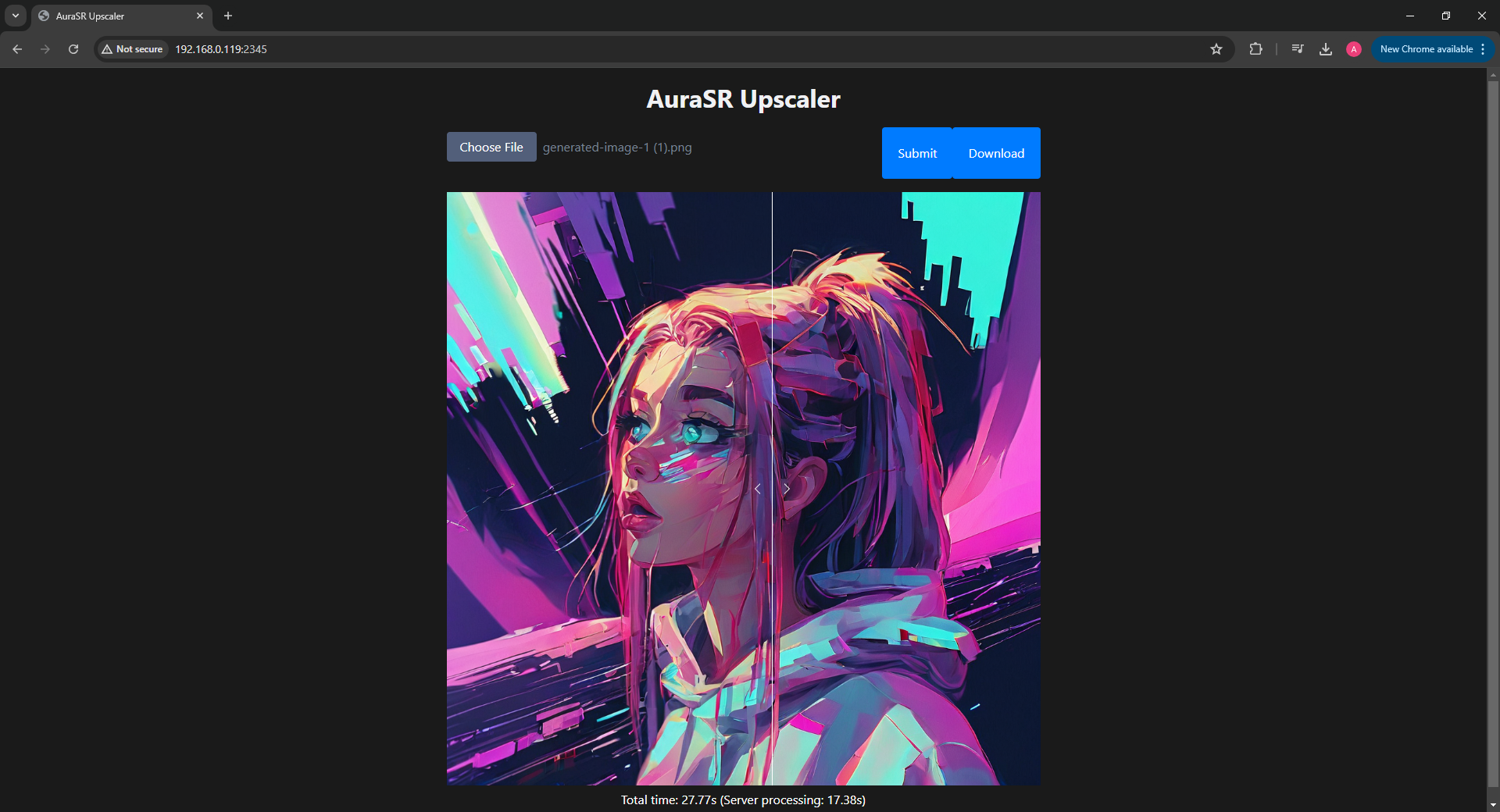

Figure 1: The final site

As for AuraSR, it did what it says it does: it increases the resolution of the image. It doesn’t perform any miracles, though. If part of an image looked a little weird before, whether it was a little blurry or had some aliasing, it will still be there afterward, except now it will be a little weird at 4x the resolution!

If you want to see and run the code yourself, or look at the prompt that was used to make it, you can find both here.